Abstract: As U.S. counterterrorism strategy has shifted toward “risk-based prioritization” in an environment of constrained resources, terrorism risk assessment has become more critical: as the efficient allocation of resources becomes more crucial, the margin for error shrinks. Yet, existing approaches to risk assessment remain fragmented in both theory and practice. This article offers a primer and a bridge. It synthesizes a diverse literature on terrorism risk and provides a perspective on the strengths, limitations, and practical utility of various approaches, models, and concepts. Turning to practice, it provides a case study of the Department of Defense’s Joint Risk Analysis Methodology (JRAM) and proposes an operational Bayesian risk framework that integrates analyst priors, observable indicators, feasible courses of action, and explicit loss functions. This is complemented by a discussion of how data standards, automation, and modest AI applications can support rather than replace expert judgment. The conclusion outlines a future research agenda emphasizing bridges between individual and network-level risk instruments and systematic evaluation of past U.S. government risk assessment cases. It makes the case that if the United States is to remain serious about ‘risk-based’ counterterrorism, then terrorism risk assessment itself must be modernized conceptually, institutionally, and technologically to match the complexity and dynamism of the threat it seeks to understand.

The assessment of terrorism risk has always been important to U.S. counterterrorism strategy, especially since 9/11. But over the past several years, as the United States has been navigating a shift in the priority placed on counterterrorism (a move that has affected U.S. CT posture abroad and the resources available for CT), the issue of terrorism risk has become even more central. An important reflection of this change is found in National Security Memorandum 13 (NSM-13), the Biden administration’s central CT strategy document, which employed “a risk-based prioritization framework to inform policy decision-making and resourcing to ensure focus on our highest-priority CT objectives.”1 The shift has also been highlighted in statements made by senior counterterrorism officials. For example, in 2023, Nicholas Rasmussen, who was then serving as the Department of Homeland Security’s Counterterrorism Coordinator, noted: “As a result of diminished forward-deployed resources and government attention, the counterterrorism strategy focuses more on risk management and risk mitigation.”2 In early 2025, senior CT officials in the Trump administration called attention to similar dilemmas, noting how the “threat from global jihadists has expanded significantly, although the resource to counter them have declined.”3 In practice, this has meant that the United States has needed to be even more careful, calculating, and deliberate in how it evaluates terrorism risk, as the reduction in CT resources and focus has narrowed the margin of error. It has also meant, at least in some cases, that the United States has had to be more risk accepting.

Despite the central, and growing, role that risk assessment plays as a pillar of U.S. CT strategy, there is little developed work that discusses how the U.S. government, and its specific components, approach terrorism risk in practice. Indeed, while the literature is strong in theory, it offers much less insight into the real-world tradeoffs and limitations of key models, how they can be practically implemented, and how they can evolve to keep pace with today’s complex and dynamic terror threat landscape.

This article aims to enhance understanding of terrorism risk and advance conversations about the practice, and evolution, of terrorism risk approaches. It is part primer and part bridge, as it tries to show how theory connects, or at least intersects, with practice. It starts, in Part I, by taking high-level stock of the literature. It offers a perspective on what practitioners should take away from the literature, including a discussion of key concepts and models and the related strengths and weaknesses of different approaches. Part II bridges to practice and includes a short case study of the primary strategic approach that the Department of Defense uses to evaluate risk: the Joint Risk Analysis Methodology. Part III contains a discussion of how Bayesian risk calculations can be operationalized and used to assess terrorism risk, and how different sources of data, artificial intelligence, and automation can also be integrated into that type of approach. The article concludes with thoughts about next steps: future areas of research and how terrorism risk approaches can evolve.

Part I: Terrorism Risk in Theory – Definitions, Key Approaches, and Takeaways

This section provides a general overview of the terrorism risk literature with emphasis placed on categorizing the corpus, highlighting important findings, and discussing key models, concepts, and approaches that have been developed to evaluate terrorism risk. This latter part, which is the focus of the second half of Part I, includes a discussion about the general utility, strengths, and limitations of key approaches for practitioners in today’s environment.

The Terrorism Risk Literature – Collections, Areas of Coverage, and Key Findings

The terrorism risk literature base is a corpus of work that includes more than 60 articles and reports that the authors identified and reviewed. While this corpus includes different perspectives on how terrorism risk should be defined, the literature orients around terrorism risk being defined as a function of threat, consequence, and vulnerability. A core pillar of the corpus is the presentation of methods to model and evaluate terrorism risk, and debates about various approaches, which are explored in the next sub-section.

Articles in the corpus explore different types of terrorism risk. One important dividing line is the unit of analysis. For example, many articles explore terrorism risk through the lens of groups or networks.4 This includes a subset of articles that examine risk through the lens of specific types of terror organizations or extremists motivated by different ideologies.5 A study of the behavior of nearly 400 terror groups active between 1968 and 2008 found that “the production of violent events tends to accelerate” as groups increase in size and experience.6 As noted by the authors of that study:

This coupling of frequency, experience and size arises from a fundamental positive feedback loop in which attacks lead to growth which leads to increased production of new attacks. In contrast, event severity is independent of both size and experience. Thus larger, more experienced organizations are more deadly because they attack more frequently, not because their attacks are more deadly, and large events are equally likely to come from large and small organizations.7

This group-level view contrasts with a developed sub-field, represented by a cluster of articles that focus on the risks posed by individual extremists or lone-actor offenders. This collection of articles has a strong practical orientation, as these works either present frameworks or use real-world data to examine the utility of instruments that have been developed to identify individual radicalization and terrorism risk mobilization factors.8 For example, the literature discusses at least nine risk and threat assessment instruments developed for violent extremism.9 Articles in this collection evaluate various instruments, such as the Extremism Risk Guidance 22+ (ERG22+) formulation tool, which was developed to assess “risk and need in extremist offenders;”10 the Terrorist Radicalization Assessment Protocol-18 (TRAP-18), “an investigative framework to identify those at risk of lone actor terrorism;”11 and the Detention of Violent Jihadists Radicalization (DRAVY-3), an instrument that was designed “to assess the risks of violent jihadist radicalization in Spanish prisons.”12 The latter study, for example, evaluated a “pilot form of the DRAVY-3 … [that] was filled in, in April–May 2021 in fifty-six Spanish penitentiary centres” including data on 582 inmates involving 63 indicators: 20 for violence, 21 focused on radicalization, nine oriented around changes in habits, seven ethnographic considerations, and six other variables.13 These data-driven studies complement other academic research14 and efforts by governments15 and technology platforms16 to better understand the behaviors of individuals involved in terrorism, to surface extremist content, and to refine risk and mobilization indicators. Insights and lessons learned from the development and practical implementation of these various instruments would also likely be useful inputs to help evolve group- and network-focused terror risk approaches.

Another important dividing line in the literature is the distinction between articles that examine terrorism risk through a more general lens and those that focus on specific dimensions of terrorism risk. The latter category includes articles that explore terrorism risk through specific types of targets, such as commercial aviation17 or critical infrastructure,18 or specific weapons or types of terror attacks, such as terror incidents that involve the use of chemical agents.19 It also includes articles focused on terrorism risk insurance programs and specific types of events, such as large-scale terror attacks similar to 9/11 that, while rarer, can generate more devastating consequences.20 These rare but extremely high-severity events can be hard to anticipate and predict, and they complicate the scope of what a terror risk model needs to consider and cover.

A collection of the more focused articles explores temporal terrorism risks, spatial dynamics, or contextual factors through the lens of a specific geographic area (e.g., a region, country, or city).21 For example, the collection includes an article that empirically examines the dynamics of terrorism risk in three Southeast Asian countries;22 articles that provide separate data-driven profiles of Israel23 and Pakistan;24 a study on radicalization risk factors in the United States;25 a comparative evaluation of terrorism in the United States, United Kingdom, and Ireland;26 and a study that examined how “country characteristics affect the rate of terrorist violence.”27 Geography is also a key point of orientation for a terrorism risk assessment of historic urban areas in Europe.28

These more granular studies provide some helpful takeaways about the temporal and geographic determinants and dynamics of terrorism risk. For example, an empirical study of terror attacks in Israel from 1949 to 2004 found, perhaps not surprisingly, that terrorists were “more likely to hit targets more accessible from their own homebases and international borders, closer to symbolic centers of government administration, and in more heavily Jewish areas.”29 An examination of “waiting time between attacks” also revealed that in the Israeli context, long “periods without an attack signal lower risk for most localities, but higher risk for important areas such as regional or national capitals.”30 The quantitative study of terror incidents in Indonesia, the Philippines, and Thailand examined “patterns of terrorist activity in terms of three concepts: risk, resilience and volatility,” and it did so by leveraging a self-exciting model, in which “the occurrence of a terrorist event ‘excites’ the overall terrorist process and elevates the probability of future events as a function of the times since the past events.”31 These concepts could be used by the United States and other governments to refine and better model terrorism risk, especially in select areas.

The literature can also be broken down and organized by method or by an article’s purpose, which yields four primary categories. First, the majority of articles in the corpus focus on theoretical frameworks and debates about how to approach and model terrorism risk. These range from discussions about more common approaches, such as probabilistic risk assessment, to a model designed to help prioritize anti-terrorism measures, to more boutique approaches. Articles that assess terrorism risk approaches or that aim to evaluate specific terrorism risk mitigation initiatives or programs form a second category. RAND has been a principal player in this space since the early 2000s. While RAND’s work covers different aspects of the topic, it has done a considerable amount of work focused on the intersection between homeland security and terrorism risk.32 A third category consists of articles that examine terrorism risk through case studies.

The final category consists of articles that discuss how technology, computational approaches, or artificial intelligence can be used to inform or augment terrorism risk approaches. This category includes, for instance, an article focused on the use of Natural Language Processing (NLP) to evaluate “whether the language used by extremists can help with early detection of…risk factors associated with violent extremism.”33 Another important article discussed “the challenges and opportunities associated with applying computational linguistics in the domain of threat assessment.”34 While not directly focused on terrorism risk, another contribution advances efforts to develop “automated, accurate, and scalable [terror attack] attribution mechanisms” by leveraging terrorism incident data to evaluate the performance of “ten machine learning algorithms models and two proposed ensemble methods” focused on the task.35 These studies highlight how artificial intelligence approaches and tools can be used to derive new or additional insights from different types of data, and to do so at both scale and speed.

Key Theories, Concepts, and Approaches

The literature also introduces and discusses key theories, models, and concepts that are used, or could potentially be used, to evaluate or understand terrorism risk. This subsection explores key approaches and concepts that are discussed and debated in the literature, with emphasis placed on their strengths, limitations, and practical utility. This discussion is not meant to be an exhaustive list of ideas and methods, but rather an overview of concepts and approaches that the authors believe are important to highlight.36

Foundations: Explaining the Statistical Definition of Risk

Along with the empirical notion of risk, there is also a statistical definition that is germane to the discussion of terrorism risk. In statistical decision theory, risk is defined as the average loss incurred as a result of the decisions one makes. To measure this accurately, we need to understand how the data manifest given a parameter, what our possible action space is, and how we will measure loss.37 For the purposes of calculating terrorism risk, we can think of the parameter as representing the various states of nature that may be true. For instance, one state of nature might be that there is an inactive sleeper cell in a country, another that the cell has already begun to plan attacks, and another that there is no threat at all in the country. Note that the mathematical or statistical notion of risk seeks to identify the best action to take. That is, when we discuss risk, we are not talking about threat but rather about the residual threat that remains given the different ways we might decide to act.

To put this in more concrete terms for a terrorism analyst, to accurately measure risk, we would need to understand what we would expect to observe in a location at a given terrorist threat level. For a statistician, this would be the probability distribution given a parameter, or in other words how the data would manifest given a parameter. We would next need to understand what our possible responses to the situation could be. For example, possible actions could be to surge ISR assets, increase soldier presence, or simply do nothing. For a statistician, this would be defining the action space. The final piece that would be necessary is an understanding of what the possible loss would be. For instance, if we think that we are in an area of high terrorist threat and we choose to do nothing, our expected loss would be higher than if we are in an area of low terrorist threat and choose to do nothing. This, for a statistician, would be the loss function.

While this framework is relatively straightforward, the most important distinction is that a risk function is defined for an action, not for a location. We measure risk according to the average loss for a given action, not for a location. Further, to accurately assess risk it is necessary to accurately define the probability of events occurring at different threat levels, capture the action space of possible responses, and quantify the loss for various actions at given threat levels. While the mathematics behind these calculations is relatively straightforward, properly quantifying these measures often is not.
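The three ingredients described above (how observations manifest at each threat level, the action space, and the loss function) can be put together in a short sketch. Everything in it is an assumption made for illustration: the state names, the two possible observations ("quiet" and "chatter"), the probabilities, and the notional loss values are invented and do not come from any operational source. The sketch computes the average loss of a decision rule, that is, a mapping from what is observed to the action taken, for each state of nature.

```python
# Hypothetical states of nature and actions (illustrative only).
STATES = ["no_threat", "sleeper_cell", "active_planning"]
ACTIONS = ["do_nothing", "surge_isr", "increase_presence"]

# P(observation | state): how the data would manifest at each threat level.
# These numbers are invented for the example.
LIKELIHOOD = {
    "no_threat":       {"quiet": 0.90, "chatter": 0.10},
    "sleeper_cell":    {"quiet": 0.60, "chatter": 0.40},
    "active_planning": {"quiet": 0.20, "chatter": 0.80},
}

# LOSS[state][action]: notional cost units, also invented.
LOSS = {
    "no_threat":       {"do_nothing": 0,   "surge_isr": 5,  "increase_presence": 10},
    "sleeper_cell":    {"do_nothing": 40,  "surge_isr": 15, "increase_presence": 20},
    "active_planning": {"do_nothing": 100, "surge_isr": 50, "increase_presence": 25},
}


def risk(state, rule):
    """Average loss of a decision rule (observation -> action) in one state."""
    return sum(
        prob * LOSS[state][rule[observation]]
        for observation, prob in LIKELIHOOD[state].items()
    )


# Two hypothetical decision rules to compare.
always_wait = {"quiet": "do_nothing", "chatter": "do_nothing"}
react_to_chatter = {"quiet": "do_nothing", "chatter": "surge_isr"}

for name, rule in [("always_wait", always_wait),
                   ("react_to_chatter", react_to_chatter)]:
    print(name, {state: round(risk(state, rule), 2) for state in STATES})
```

Note that the output is indexed by decision rule and state of nature, not by location, which mirrors the point that risk is a property of what we choose to do.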

With a few exceptions, the vast majority of the mathematical literature focuses on addressing only a subcomponent of what is needed to measure risk. Typically, the literature addresses the probability of an event occurring. While this is a necessary step in calculating risk, as detailed in Part III, it is not sufficient.

Probabilistic Risk Assessment

Probabilistic risk assessment (PRA) is similar to the mathematical definition of risk in that it seeks to quantify the likelihood of an event and the consequence of that event occurring. The formula is Risk = Probability × Severity.a While the approach is referred to as probabilistic risk assessment, the risk calculation does not return an actual probability. However, risk can be quantified and different regions can be compared by using this calculation. Unlike the mathematical definition of risk, probabilistic risk assessment does not take into account the observer’s actions. That is, risk in this context is assumed to exist regardless of what mitigating measures may be employed. The formula is likely appealing to many operational units because it is simple to construct and easily explained. That simplicity, though, hides a multitude of assumptions and questions that must be answered. Specifically, how is the probability of an event occurring calculated, and what is meant by severity? While severity is often calculated through loss of human lives or monetary cost, the probability of an event occurring is typically either handled through an analyst’s “best guess” or obscured by a more complex mathematical formula that hides other assumptions. Some criticism of PRA has focused on its inability to hedge against the different probabilities that attackers may eventually act upon. That is, attackers may choose to attack in a manner that is unexpected, simply because that manner of attack is unexpected.38 While this certainly could be true, it would also assume that the attackers knew how the defenders, or the analysts, were assigning probabilities in the first place. The argument then becomes tautological, leading to a conclusion that no probabilities should be calculated. Typically, this line of thought ends in a qualitative risk assessment; however, we argue that a qualitative risk assessment is nothing more than an informed prior distribution, leading to the conclusion that we should leverage more Bayesian methods in our risk calculations.
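To make the Risk = Probability × Severity calculation concrete, the following minimal sketch scores and ranks three hypothetical regions. The region names, probabilities, and severity figures are assumptions invented for the example. In practice the probability would often be an analyst's best guess, which is exactly the hidden assumption discussed above.

```python
# Hypothetical inputs: region -> (annual probability of an attack,
# severity in notional loss units). All values are invented.
REGIONS = {
    "region_a": (0.02, 500.0),
    "region_b": (0.10, 40.0),
    "region_c": (0.005, 3000.0),
}


def pra_score(probability, severity):
    """Risk = Probability x Severity. The result is a score, not a probability."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    return probability * severity


scores = {name: pra_score(p, s) for name, (p, s) in REGIONS.items()}
ranked = sorted(scores, key=scores.get, reverse=True)

for name in ranked:
    print(f"{name}: {scores[name]:.1f}")
```

In this invented example the region with the lowest probability ranks highest because of its severity, and nothing in the score reflects which mitigating actions are available.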

Bayes Risk

As alluded to above, the issue with a strict probabilistic risk assessment is that the data is often not available to quantify the likelihood of an event occurring. So, analysts often make their best-informed guess on the likelihood of an event occurring. However, if the probability of an event occurring is calculated through an analyst’s best guess, or prior belief before data convinces them otherwise, the formula really then belongs in the class of Bayesian risk calculations. A Bayesian risk calculation is a subset of a probabilistic risk assessment where the concept of mathematical risk as defined above is extended through incorporating an analyst’s prior belief. This allows for calculations to occur in the absence of data but also updates when data becomes available. This has made Bayesian risk approaches useful, as when “the situation changes, they are easy to update; as the evidence changes, the posterior probability changes.”39 Bayesian networks also hold utility for risk communication, as “they show all the prior and marginal probability distributions of the risk results.”40 Bayes approaches have been used “in the development of anti-terrorism modeling” and “to predict distribution for lethal exposure to chemical nerve agents such as Sarin.”41 While Bayesian inferential techniques have been criticized as relying on the availability and completeness of data,42 these techniques do naturally blend qualitative analysis (in the form of a prior belief) and can still provide insight when data are limited as is sometimes the case in assessing terrorism risk.

Mathematically, a Bayesian risk calculation incorporates a prior probability that is placed upon the parameter used in the classic risk calculation. The Bayes risk of an action is calculated by summing, over the possible states of nature, the product of three terms: the loss incurred if the action is performed when that state is true, the likelihood of the data under that state, and the prior belief that the state is true.b
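A minimal sketch of this calculation follows. It assumes three hypothetical states of nature, two possible observations, and invented priors, likelihoods, and losses, none of which come from the article or from real data. The sketch normalizes the product of prior and likelihood into a posterior before weighting the losses. That normalization is a design choice made here for readability, and it does not change which action has the lowest expected loss.

```python
ACTIONS = ["do_nothing", "surge_isr", "increase_presence"]

# Analyst's prior belief over states of nature (hypothetical values).
PRIOR = {"no_threat": 0.70, "sleeper_cell": 0.25, "active_planning": 0.05}

# P(observation | state), invented for the example.
LIKELIHOOD = {
    "no_threat":       {"quiet": 0.90, "chatter": 0.10},
    "sleeper_cell":    {"quiet": 0.60, "chatter": 0.40},
    "active_planning": {"quiet": 0.20, "chatter": 0.80},
}

# LOSS[state][action] in notional units, invented for the example.
LOSS = {
    "no_threat":       {"do_nothing": 0,   "surge_isr": 5,  "increase_presence": 10},
    "sleeper_cell":    {"do_nothing": 40,  "surge_isr": 15, "increase_presence": 20},
    "active_planning": {"do_nothing": 100, "surge_isr": 50, "increase_presence": 25},
}


def posterior(observation):
    """Update the prior with one observation using Bayes' rule."""
    unnormalized = {s: PRIOR[s] * LIKELIHOOD[s][observation] for s in PRIOR}
    total = sum(unnormalized.values())
    return {s: w / total for s, w in unnormalized.items()}


def expected_loss(action, belief):
    """Sum over states of loss(state, action) weighted by belief in that state."""
    return sum(belief[s] * LOSS[s][action] for s in belief)


def best_action(belief):
    """Action with the lowest expected loss under the given belief."""
    return min(ACTIONS, key=lambda a: expected_loss(a, belief))


print("prior only:   ", best_action(PRIOR))
print("after chatter:", best_action(posterior("chatter")))
```

With these invented numbers the calculation already returns a recommendation from the prior alone, and the recommendation shifts once an observation arrives, which illustrates how the approach works in the absence of data and updates when data become available.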

Game-Theoretic Approaches

Game-theoretic approaches, which provide a way to study “multi-agent decision problems,”43 are another method discussed in the literature. Approaches informed by game theory have been proposed because terrorism is shaped by interactions between players, especially a terror network and a CT entity,44 and game theory can help model how terror attackers, as intelligent adversaries, may adapt to counterterrorism actions.45 The motivation has also been driven by the view, expressed by some, that “probability is not enough” to measure terror risk.46 But instead of game theory being viewed as the central tool to evaluate terrorism risk, the literature primarily discusses how game-theoretic approaches could be useful as a “decision tool in counterterrorism risk assessment and management,”47 and as a way to improve “current risk analyses of adversarial actions.”48

Security-focused games have been utilized for “many real world applications,” which intersect with the problem of terrorism. For example, “game-theoretic models have been deployed” to support: “canine-patrol and vehicle checkpoints at the Los Angeles International Airport, allocation of US Federal Air Marshals to international flights, US Coast Guard patrol boats, and many others.”49

While game-theoretic approaches appear to hold some promise for terrorism risk assessment, their use is also complicated by limitations and tradeoffs. For example, a “major challenge” in models used “is their reliance on complete information and full rationality assumptions, which may not hold in practical security settings where attackers operate under uncertainty and defenders face information asymmetries.”50 Unfortunately, the ‘world’ of information asymmetries is usually the environment that CT practitioners need to live and act in. So, while “game theory will tell you how the game should be played,” it might not tell you “how it will actually be played.”51 As noted by Ezell et al., the use of game theory may thus “lead an analyst to gain some unexpected and interesting insight into the terrorism problem, which other techniques fail to provide,” but a key danger is that insight “could be for the wrong game in the first place.”52

Richardson’s Law and Power Law Distribution

The English mathematician and scientist Lewis Fry Richardson found that the “relationship between the severity of war, measured by battle deaths, and the frequency of war”53 followed a power-law distribution. This type of probability distribution is considered a heavy-tailed distribution and is used to model situations where large events are rare while small events are common. It has been used to model and describe a diverse mix of extreme events such as earthquakes, solar flares, and stock-market collapses,54 and to understand ‘black swan’ events.

It has also been used to describe, and explain, certain dynamics of terrorism. For example, research by Aaron Clauset, Maxwell Young, and Kristian Skrede Gleditsch found that the “apparent power-law pattern in global terrorism is remarkably robust”55 and that it “persists over the past 40 years despite large structural and political changes in the international system.”56 Clauset and Ryan Woodard have used power laws to evaluate the historical and future probabilities of large terror events, such as 9/11.57 Research by Stephane Baele also suggests that “heavy-tailed, power-law types of data distribution” are ubiquitous “in all major dimensions of digital extremism and terrorism.”58
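As a rough illustration of how a power-law tail can be estimated and then used to gauge the probability of a very large event, the sketch below fits the exponent of a continuous power law by maximum likelihood and evaluates the tail probability. This is not the procedure used in the studies cited above. The data are synthetic, the true exponent of 2.4 and the minimum severity of 1 are arbitrary assumptions, and real casualty counts are discrete, so a continuous fit is only an approximation.

```python
import math
import random


def sample_power_law(n, alpha, x_min, rng):
    """Draw n values from a continuous power law p(x) ~ x**(-alpha), x >= x_min,
    using inverse-transform sampling."""
    return [x_min * (1.0 - rng.random()) ** (-1.0 / (alpha - 1.0))
            for _ in range(n)]


def fit_alpha(values, x_min):
    """Maximum-likelihood estimate of the exponent for values >= x_min."""
    tail = [v for v in values if v >= x_min]
    return 1.0 + len(tail) / sum(math.log(v / x_min) for v in tail)


def tail_probability(x, alpha, x_min):
    """P(X >= x) under the fitted power law, valid for x >= x_min."""
    return (x / x_min) ** (-(alpha - 1.0))


rng = random.Random(0)  # fixed seed so the synthetic data are reproducible
severities = sample_power_law(20000, alpha=2.4, x_min=1.0, rng=rng)
alpha_hat = fit_alpha(severities, x_min=1.0)

print("estimated exponent:", round(alpha_hat, 2))
print("P(severity >= 1000):", tail_probability(1000.0, alpha_hat, 1.0))
```

The heavy tail is what makes rare, very large events far more probable than a thin-tailed model would suggest, which is why the estimated exponent matters so much when reasoning about events on the scale of 9/11.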

Hawkes Point Process Model

Another important concept discussed in the literature is the Hawkes Point Process Model.59 The Hawkes process is a self-exciting point process. In the context of terrorism, “self-exciting models assume that the occurrence of a terrorist event ‘excites’ the overall terrorist process and elevates the probability of future events as a function of the times since the past events.”60
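The self-exciting idea can be sketched with one common parameterization of the Hawkes conditional intensity, an exponential decay kernel. The choice of kernel, the parameter values (`mu`, `alpha`, `beta`), and the event times are all assumptions made for illustration and are not taken from the cited studies. A baseline rate `mu` is raised by `alpha` immediately after each event, and that boost decays at rate `beta`.

```python
import math


def hawkes_intensity(t, past_events, mu=0.2, alpha=0.5, beta=1.0):
    """Conditional intensity at time t given earlier event times:

        lambda(t) = mu + sum over t_i < t of alpha * exp(-beta * (t - t_i))

    mu is the baseline rate, alpha the jump after each event, and beta the
    decay rate of that excitation (all hypothetical values here).
    """
    return mu + sum(
        alpha * math.exp(-beta * (t - t_i))
        for t_i in past_events
        if t_i < t
    )


# Hypothetical event times (for example, days since the start of observation).
events = [1.0, 1.5, 6.0]

for t in [0.5, 1.6, 4.0, 6.1, 12.0]:
    print(f"t={t:5.1f}  intensity={hawkes_intensity(t, events):.3f}")
```

The intensity is highest just after the clustered events at times 1.0 and 1.5 and drifts back toward the baseline during quiet periods, which is the 'excitation' behavior the model is meant to capture.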

This idea is intriguing as it may help to model a key aspect of dynamism that influences terrorism today: how some acts of terrorism by individuals or groups help to ‘spark,’ ‘ignite,’ or provide motivation for other acts of terrorism. This is not a purely theoretical problem. Indeed, as noted by White, Porter, and Mazerolle, “although terrorist attacks might appear to occur independently at random times, a sizeable body of theoretical and empirical research suggests that terrorist incidents actually occur in non-random clusters in space and time.”61 While these three researchers use the Hawkes process to evaluate terrorism patterns in three Southeast Asian countries, research by other scholars highlights other contexts where a ‘self-exciting’ dynamic appears to be playing an important role. For example, an examination of vehicular terror attacks found “the demonstration effect created by high-casualty vehicle-ramming attacks has in the past seemingly produced a surge in copycat attacks.”62 More concrete evidence can be found in the world of far-right terrorism. For years, researchers have documented a pattern of behavior, what has been framed as the ‘cumulative momentum of far-right terror’: how individual terror attackers are influenced by, and seek to build on, the momentum sparked by prior attacks, with the 2019 deadly terror attack in New Zealand being a key catalyst.63

While the ‘self-exciting’ dynamic exists in some areas, additional research illustrates how it does not appear to exist in others. For example, a data-driven study of terrorist suicide-attack clusters “did not uncover clear evidence supporting a copycat effect among the studied attacks.”64 This suggests that while the Hawkes process holds some utility to understand the modern dynamics of terrorism risk, its utility may also be limited to certain types or categories of threats.

Structured Professional Judgement

Another method, structured professional judgement (SPJ), is viewed by some researchers as “the current gold standard for assessing and managing violence risk”65 and “the best practice approach for assessing terrorism risk.”66 As noted by Dean and Pettet:

The “SPJ” methodology arose as a compromise position between two disputing camps. The first camp, “unstructured clinical judgement,” relies on professional expertise in collecting, aggregating, and interpreting data. The second camp, “actuarial assessment,” “strives to achieve empirically accurate classifications by replacing clinical judgment with validated instruments and algorithms.”67

SPJ is an attempt to blend these two approaches. The result, at least in theory, “is an evidence-based approach that combines empirically grounded tools with professional judgment.”68 For example, this can take the form of experts being asked through a standardized process, such as a survey or checklist, to rate or score an individual or group in relation to defined risk factors or criteria and established risk level categories (e.g., low, moderate, high). To help standardize inputs, the assessor can “be trained in a “calibration” exercise … to ensure an adequate level of consistency is obtained in rating each risk indicator item.”69 After inputs are received, the professional judgementsc from each expert can then be weighed and combined to provide a composite risk rating, which can be assessed in relation to a larger collection of responses to develop a summary risk score.
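The weighting and combining step described above can be sketched as follows. This is a hypothetical illustration, not the scoring scheme of any actual SPJ instrument: the three risk factors, their weights, the numeric coding of the rating levels, and the category cut-offs are all assumptions. The sketch also reports the spread across assessors, since a composite score on its own can hide disagreement between experts.

```python
from statistics import mean, pstdev

# Hypothetical numeric coding of the rating levels.
LEVELS = {"low": 0, "moderate": 1, "high": 2}

# Hypothetical risk factors and weights (weights sum to 1).
WEIGHTS = {"intent": 0.5, "capability": 0.3, "opportunity": 0.2}

# Invented ratings from three assessors for a single subject.
ratings = {
    "assessor_1": {"intent": "high", "capability": "moderate", "opportunity": "low"},
    "assessor_2": {"intent": "high", "capability": "high", "opportunity": "moderate"},
    "assessor_3": {"intent": "moderate", "capability": "moderate", "opportunity": "low"},
}


def assessor_score(rating):
    """Weighted score for one assessor, on the 0 (low) to 2 (high) scale."""
    return sum(WEIGHTS[factor] * LEVELS[level] for factor, level in rating.items())


def composite(all_ratings):
    """Mean score across assessors and the spread (population std. deviation)."""
    scores = [assessor_score(r) for r in all_ratings.values()]
    return mean(scores), pstdev(scores)


def to_category(score):
    """Map a 0-2 score onto three equal-width bands (an arbitrary choice)."""
    if score < 2 / 3:
        return "low"
    if score < 4 / 3:
        return "moderate"
    return "high"


score, spread = composite(ratings)
print(f"composite={score:.2f} ({to_category(score)}), spread={spread:.2f}")
```

A large spread relative to the composite score would be one simple signal that assessors diverge and that their ratings deserve a closer look before the summary rating is used.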

SPJ is widely used to assess the risk of violence in various areas, from terrorism and extremism, to stalking, domestic violence, and sexual violence.70 SPJ, for example, informs and is used as a part of the Department of Defense’s Joint Risk Analysis Methodology. It is also used as a part of the Violent Extremist Risk Assessment tool,71 the TRAP-18 investigative framework,72 ERG22+,73 and other similar instruments.

One core challenge of SPJ is “how best to deal with the subjectivity inherently involved in professional judgement.”74 This is because SPJ tools are “not as ‘objective’ a process as some suggest.”75 While SPJ approaches provide a structured process that can help to control subjectivity, there will always be some “inherent ‘subjectivity’ of an analyst’s professional judgement.”76 Dean and Pettet discuss ‘controlling out’ and ‘controlling in’ as two different approaches to try and manage this subjectivity challenge.77

SPJ-driven approaches are clearly valuable, but they should also be used with care. Integrating expert views into terrorism risk approaches is a source of strength, but SPJ approaches on their own do a poor job of highlighting individual or collective biases. It therefore seems prudent to leverage other data, either to validate expert views or to identify areas of divergence so that those can be explored.

Risk Terrain Modeling

Several researchers have used risk terrain modeling (RTM) to evaluate and better understand terrorism risk in specific locales, such as a city. Developed by Joel Caplan and Leslie Kennedy in 2009, RTM is a method to examine the spatial dynamics of crime, to “identify the risks that come from features of a landscape and [to] model how they co-locate to create unique behavior settings for crime.”78 d The approach has drawn the attention of some scholars because research “consistently demonstrates crime is spatially concentrated,”79 and since terrorism is a type of crime, it is worthy of exploration.

There has been a small collection of academic studies that use RTM to evaluate the spatial dynamics of terrorism risk. This includes the use of RTM to evaluate how geographic space, risk factors, and terrorism intersect in places such as Istanbul, Belfast, and New York.80 Research focused on RTM has been complemented by other work that centers ‘place.’ Two sets of scholars, for example, have developed frameworks to help navigate how acts of terrorism, and terror decision making, intersect with place. This includes the EVIL DONE terrorism risk framework “for assessing the desirability of targets based on eight criteria”81 developed by Clarke and Newman and the TRACT framework, created by Zoe Marchment and Paul Gill, that identifies five factors that shape the spatial decision-making of a terrorist actor.82

Like other approaches, RTM has strengths and weaknesses. One key strength is that “RTM as an overall approach is relatively simple and user-friendly, and the associated RTMDx software provides an opportunity for practitioners to readily utilize the approach with minimal resources and time spent on learning new processes.”83 Another strength, according to a systematic review of the method by Zoe Marchment and Paul Gill, is that “RTM has been successful in identifying at risk places” for various crimes, including terrorism.84 But, as Marchment and Gill also note:

A key limitation of RTM is that it does not address temporal variations in crime locations (over the course of day, duration of a week, over different seasons, etc.) Another limitation of RTM in general is that it may identify areas as being risky where crime may never emerge. It cannot be assumed that because a location is high in risk according to identified risk factors, that crime will always ensue—there can be numerous areas identified as risky, but no crime may actually occur in these defined risky areas.85

The detailed data requirements of RTM would also limit its value and utility in various counterterrorism contexts, especially those focused on broader areas, remote locations, or ungoverned or poorly governed terrain for which granular, reliable geolocated environmental or societal data do not exist.

Conjunctive Analysis of Case Configurations

The Conjunctive Analysis of Case Configurations (CACC) is another method that—like RTM—has its roots in criminology and crime prevention, but that has also been explored through the lens of terrorism.86 CACC, a multivariate method, “is an analytical technique for identifying whether certain variables are causally related to an outcome while simultaneously accounting for other measures of interest.”87 It can also be used to test hypotheses and explore data patterns and causal relationships.88 The method “begins by developing a data matrix, referred to as a truth table, consisting of all possible combinations, or interactions, of the variable attributes.”89 The truth table is then leveraged to create counts to “help identify key incident characteristics, their relationship with each other, and the frequency in which they are included in dominant configurations.”90 The method has been used to assess radicalization risk in the United States91 and similarities between domestic types of terrorism in the United States, United Kingdom, and Ireland.92

When it comes to terrorism risk and terror prevention, scholars have argued that approaches like CACC and RTM are useful as they provide context and can be used to break down the dynamics of risk and the multitude of factors that help drive it in specific areas. Or put another way, the two methods can help to ‘color in’ the general picture of risk that statistical approaches offer. As noted by Jeffrey Gruenewald and his co-authors, CACC and RTM can elucidate terror opportunity structures and help to bridge this gap:

Another limitation of prior statistical research on risk of terrorism attacks occurring is the overreliance on statistical main effects models that tell us how single variables increase or decrease the likelihood of terrorism occurring. Useful in their own right, these approaches cannot capture complex spatial risk profiles of various terrorism-related activities, specifically the amalgamation of factors shaping opportunities situated within unique socio-political contexts that are more or less conducive for terrorists to reside, plan and prepare, and commit attacks.93

But, like RTM, CACC requires developed, granular, and reliable data, which limits where and when it can be used.

By providing an overview of literature that focuses on terrorism risk, and highlighting key models, approaches, and concepts, Part I aimed to illuminate a set of options, aligned with empirical and theoretical research resources, for practitioners to examine and consider. The review of the diverse and scattered literature base revealed that there are primary approaches, such as probabilistic risk assessment and structured professional judgement, that are commonly used and that hold broad utility for evaluating terrorism risks. The review also revealed several other methods that can be used for specific use cases or geographies, when there is a need to better understand and model offender-defender interactions, or to identify important contextual markers and/or the extremism and radicalization risk factors of individuals (and correlations of those factors). Key concepts, such as the power law and the Hawkes point process, also hold utility as they help to explain certain dynamics of terrorism, and related aspects of terror risk.

But the literature also highlighted divides and gaps. Indeed, one of the most interesting takeaways was the dividing line that exists between individual and group or network-based terrorism risk approaches, and how the instruments that have been developed to model and evaluate the risks posed by individuals are not only more developed—they also appear to be better evaluated. The comparative richness of the academic debate and discussion about those types of instruments and the dynamics of individual extremism risks was also quite stark. As for gaps in the literature, a primary one is how different risk assessment methods can be leveraged to complement one another and evolve terrorism risk as an area of practice.

Part II: Terrorism Risk in Practice: The JRAM as a Case Study

This section explores terrorism risk in practice through the lens of one approach, the Joint Risk Analysis Methodology (JRAM), that has been used by the Department of Defense.94

Before examining that approach, it is helpful to get a view of the role terrorism risk assessment plays in national strategy, and how approaches utilized by U.S. government agencies come together, or filter up, to affect that strategy. NSM-13, a key document that guided the Biden administration’s CT approach, provides a useful window into the issue. That document outlined the strategic role that terrorism risk assessments play in guiding U.S. CT strategy. This can be seen in how NSM-13 committed the United States to using a “risk-based prioritization framework to inform policy decision-making and resourcing to ensure focus on our highest-priority CT objectives.”95 NSM-13 also revealed how terrorism risk was being assessed at a strategic level: It was defined “as a function of terrorist intent and capability (i.e., threat) to target the Homeland or persons or facilities overseas exposure or vulnerability and the willingness and capability of host-country governments to mitigate terrorist threats within their borders.”96 Another important detail discussed in the document is the cadence of terrorism risk reviews by senior U.S. government figures. NSM-13 outlines, for example, how the National Security Council-led Counterterrorism Security Group will meet quarterly to “assess and update, as necessary, prioritization guidance based on shifts in terrorism risk or other policy decisions.”97 While NSM-13 does not speak to these details, it seems likely that during those meetings, representatives from different U.S. departments discuss their agency’s own terrorism risk findings, and any important changes that have transpired since the last meeting.

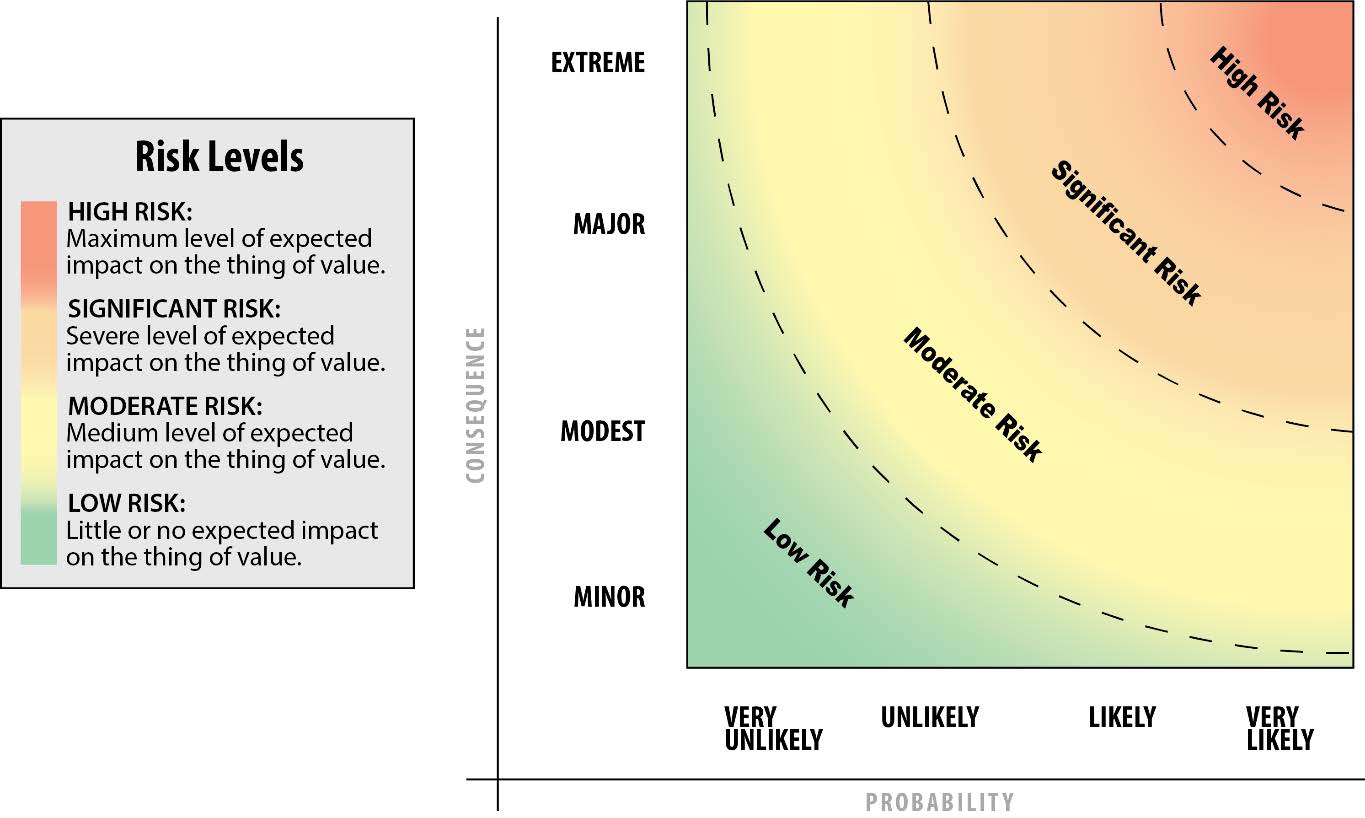

The JRAM, which is produced by the Joint Staff, is the central document that strategically guides the Department of Defense’s approach to terrorism risk. The first version of the JRAM was published in 2016 “to promote a common risk framework and lexicon to the joint force.”98 The JRAM includes elements focused on risk appraisal, risk management, and risk communication. According to the JRAM, “effective risk assessment” should evaluate risk in relation to three elements: “harmful event, probability, and consequence.”99 While the JRAM defines risk as a function of the latter two elements (probability and consequence), it proposes that those two elements be viewed, and assessed, through the ‘lens’ of a harmful event.100 This includes, as part of that process, the “identification of the source(s) and driver(s) or risk that may increase or decrease the probability or consequence.”101 For certain DoD components, sources of risk are identified through a threat survey, and drivers of risk are identified through an “OPS Survey,” both of which leverage the knowledge of experienced practitioners and experts.102 Then, after the sources and drivers of risk have been identified, “the expected probability and consequence of the harmful event” are determined.103

The formula and approach used by one DoD component is as follows: the operations indicator (derived from the OPS Survey) is subtracted from the threat survey indicator. This leads to a heuristic value indicator defined by four categories:

Category 1: Operation pressure exceeds posed threats

Category 2: Operation pressure able to mitigate threat

Category 3: Threat exceeds operation ability to mitigate

Category 4: Threat overwhelms mitigation or is unchecked104

These categories are used to estimate probability. The probability (1-4) is then multiplied by a consequence value that is assessed in relation to four defined categories: 1) minor, 2) modest, 3) major, and 4) extreme.105 Examples of terror events that would align with each category include 1) the USS Cole bombing, 2) the U.S. withdrawal from Lebanon after the Marine barracks bombing in 1983, 3) 9/11, and 4) an existential threat, such as a large-scale WMD attack.106 Multiplying the assessed levels of probability and consequence yields a risk value between 1 and 16, which is associated with one of four baseline risk levels: low, moderate, significant, or high.107
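The arithmetic described above can be sketched in a few lines. This is a minimal illustration, not the JRAM's or any DoD component's actual implementation: the function names are hypothetical, and the cut points that map the 1-16 value onto the four baseline risk levels are assumptions made purely for illustration, since the source does not give them.

```python
# Consequence categories and their scores, as described in the text.
CONSEQUENCE = {"minor": 1, "modest": 2, "major": 3, "extreme": 4}

# HYPOTHETICAL cut points (upper bound, label). The text names the four
# baseline risk levels but not the thresholds that separate them.
BANDS = [(3, "low"), (6, "moderate"), (9, "significant"), (16, "high")]


def risk_value(probability_category: int, consequence: str) -> int:
    """Multiply the probability category (1-4) by the consequence score (1-4)."""
    if probability_category not in (1, 2, 3, 4):
        raise ValueError("probability category must be 1, 2, 3, or 4")
    return probability_category * CONSEQUENCE[consequence]


def risk_level(value: int) -> str:
    """Map a 1-16 risk value onto a baseline risk level using the assumed bands."""
    for upper_bound, label in BANDS:
        if value <= upper_bound:
            return label
    raise ValueError("risk value must be between 1 and 16")


if __name__ == "__main__":
    # Example: threat exceeds the operation's ability to mitigate (category 3)
    # combined with a "major" consequence.
    value = risk_value(3, "major")
    print(value, risk_level(value))
```

Keeping the cut points in a single table makes the most subjective part of the scheme visible and easy to challenge, which matters because a different set of thresholds would relabel the same inputs.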

According to the JRAM, the final step in the risk characterization process is the plotting of the probability and consequence findings onto a risk contour that features the four baseline risk levels (see Figure 1 below for an example).108 While the exact plotting of those findings on the risk contour is in part subjective, the contour is useful in that it helps to visualize the level of risk in a simple, easy to understand way.

The JRAM approach is driven by structured professional judgement, and it has various strengths and limitations. The approach places emphasis on the experience and expertise of practitioners to assess terrorism risk (and, in other contexts, other forms of risk), and it characterizes risk through a structured process that situates qualitative and subjective expert inputs within a quantitative risk categorization framework. In that way, as noted by the JRAM, “Risk appraisal is fundamentally a qualitative process incorporating and informing commander’s judgment while quantitatively expressing probability and consequence when appropriate.”110 The JRAM’s reliance on practitioner experience is a core source of strength, as specialists who ‘live’ the terrorism problem set on a daily basis, and who have access to different types of intelligence, especially granular data, are very well postured to understand and evaluate the types of risks that terror groups pose, and to identify changes in behavior. Another key strength lies in the structure and standardization that the JRAM provides to make sense of qualitative, expert inputs. This helps commanders and other decision makers to evaluate a collection of expert inputs as a whole or in relation to one another so areas of convergence or divergence can be identified and interrogated.

But the JRAM also has limitations and downsides. While the emphasis that the JRAM places on expert inputs is well placed, it is not clear how the JRAM process controls for—or attempts to minimize—subjectivity, including biases and assumptions. For example, as noted by Michael Mazarr: “What judgments, assumptions and outright guesses had to be made in order to produce a given level of risk? How many were close-run findings that could easily have gone the other way?”111 The danger, as Mazarr highlights, is that:

Too often risk assessments have involved subjective judgments used to generate color-coded assessments without sufficient detail on their assumptions. Such singular verdicts (“moderate risk”) can offer leaders the opportunity to close their minds when any good risk process ought to be doing just the opposite—be very clear about the assumptions and nuances behind the results to force senior leaders to discuss and debate key issues.112 e

These are not theoretical concerns. These challenges are also not limited to the individual level, the responses or views of one or a few individuals; they can manifest on a collective level as well, and lead to broader problems.f For example, an article featured in this publication last year examined how the U.S. intelligence community and CT enterprise failed to accurately assess, despite indicators, that al-Qa`ida in the Arabian Peninsula (AQAP) had the intent and was seeking to attack the United States, an oversight which led to the United States being surprised by AQAP’s attempt to down an airliner over Detroit on Christmas Day in 2009. As noted by a government expert interviewed for that article: “We had assumptions about how a terror group [AQAP] operates. It was a major analytic failure.”113 In the view of another expert, the problem was not tied to lack of awareness of key indicators, but in how the evidence was interpreted and weighted.114

Another limitation is that terrorism is characterized by uncertainty and complexity. This poses challenges for terrorism risk assessments generally. For example, while terror groups will at times telegraph or demonstrate their intent and capabilities, they also often hide them so they can achieve surprise or increase the likelihood that an operation will succeed. As a result, there will always be limits on what can be known about the activity and plans of terror networks, and the precise nature of the risk(s) they pose. This affirms the importance of identifying and being honest about gaps and assumptions and scrutinizing terror risk assessment findings through the lens of what we do not know.

The dynamic and evolving nature of today’s information, security, and technology landscapes also makes it challenging for governmental efforts that involve bureaucracy and coordination to keep pace with volatile terror threats. As noted by Kim Cragin, “The JRAM does not work well for dynamic risks like terrorism.”115 A key part of the challenge is that changes in terror risk can be driven by the actions of terror networks, by changing environmental conditions (e.g., a coup, economic shifts, etc.), by operations conducted by counterterrorism forces,g or by other factors. Approaches like JRAM need to be able to iterate at pace and strategically take stock of noteworthy developments and interactions, and how those changes may impact a prior terror risk assessment finding.

While the JRAM, and public information about the method, contain helpful reflections about how the approach leverages qualitative inputs, how the process makes use of quantitative data is not clear.h The issue is important to consider as analysis of quantitative terrorism and CT data and information about changing environmental conditions can be conducted at speed or automated, and that type of data can be leveraged to supplement, or enrich, the JRAM process, or to make it more dynamic and responsive. Automated analysis of these types of data could be used to identify, or alert, practitioners about important changes or anomalies, or to validate risk assessment findings or highlight areas where data and perspectives diverge. For example, terror incident data could be leveraged to baseline risk assessments or to alert analysts about key changes in the scale, frequency, focus, reach, and lethality of specific terror networks. Key environmental indicators, such as the Fragile States Index, could be used to identify if conditions in a certain country or region are getting worse or improving, dynamics that have implications for terrorism. It is important to remember that food affordability and changes in the price of wheat are viewed as key factors that contributed to the rise of the Arab Spring, a huge development that led to violence and political change across North Africa and parts of the Middle East.116 Structured data on counterterrorism operations, such as the number of strikes, raids or arrests, can be used in a similar way to provide a more up-to-date picture on the kinetic pressure placed against terror nodes, which has a bearing on their capabilities and near-term risk.

This short discussion highlights strengths and limitations of the JRAM and several issues that are important to consider as the United States works to modernize its approach to terrorism risk. These include the importance of surfacing biases and assumptions; creating pathways to integrate the perspectives of other experts, potentially even those outside of government, to refine views on risk or interrogate findings; and leveraging the power of different sources and types of data, especially quantitative data, and approaches (e.g., automation) to augment, enhance, facilitate iteration, or add dynamism to existing risk approaches.

Part III: Operationalizing Terrorism Risk for CT – A Case and Perspective

To make more concrete how alternative definitions of risk can be employed in the CT fight, we next consider a case study using Bayes risk to arrive at the optimal decision under uncertainty. The use of Bayesian risk calculations allows analysts to combine subject matter expertise with a risk formula that is consistent with how mathematicians and statisticians understand and quantify risk. Here, we demonstrate not only how Bayesian risk can be employed but also highlight how proper data collection methods and understanding of the academic literature can help sharpen the necessary components of a risk model.

As previously discussed, a statistical definition of risk is not only a function of the threat; rather, it seeks to quantify the average loss that would occur if a unit takes a given decision. To a practitioner, this means that risk is not solely in the realm of the intelligence section for a unit, but a joint product derived from an understanding of the probability of events occurring, the action space of allowable responses, and the loss that would occur if you took that action.

In our hypothetical case study, we assume that intelligence analysts have been examining country X for some time and have determined that there are three possible situations inside the country. The first, which we will refer to as O_1, is that there are no insurgents that pose a threat to U.S. forces. The second, O_2, is that there are a few sleeper cells that will activate in the presence of U.S. forces. The third, O_3, is that there is an active threat that is planning against the United States directly.

Based on the research that the analysts have conducted, they believe that the probability of O_1 being true is 30% (denoted as P(O_1)=0.3), further P(O_2)=0.5 and P(O_3) = 0.2. The analysts further assess that if O_1 was true, there is a 90% chance they will observe no vehicle traffic between two locations, and there is a 10% chance they will observe some vehicle traffic. For O_2 there is a 10% chance they will observe no vehicle traffic (90% chance they will observe some) and for O_3 there is a 50% chance they will observe no traffic.

The operations section then states that there are three possible actions: We should conduct a raid only if we observe traffic on the ground, we should raid either way, or we should continue to monitor. If we conduct a raid, but O_1 was true, we will incur a loss of 20 lives. If we do not conduct a raid but O_1 was true, no loss is incurred. Similarly, if we raid and O_2 is true then we lose 100 lives, but if O_2 is true and we fail to raid then we would lose 200 lives. Finally, if we raid and O_3 is true we lose, say, 80 lives, but if we fail to raid and O_3 is true we would lose 300 lives.

So, we make the decision that we will raid only if we observe traffic on the ground. We then can calculate the risk surrounding that decision as:

R(raid only if traffic on ground) = P(O_1 is true given there’s traffic on ground)*20 +

P(O_2 is true given there’s traffic on ground) * 100 +

P(O_3 is true given there’s traffic on ground)*80 +

P(O_1 is true given there’s no traffic on ground)*0 +

P(O_2 is true given there’s no traffic on ground)*200 +

P(O_3 is true given there’s no traffic on ground)*300

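To complete this calculation, we need to calculate P(O_i is true given there’s traffic). To do this, we rely on Bayes’ theorem.

P(O_i is true given there’s traffic) = P(Traffic given O_i)*P(O_i from analyst belief) / P(Traffic)

Here P(Traffic) is the overall probability of observing traffic, obtained by summing P(Traffic given O_j)*P(O_j) over the three states.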

P(O_1 given traffic) = (.10 * .3) / (.10*.3 + .90*.5 + .5*.2) = 0.05

P(O_2 given traffic) = (.90 * .5) / (.10*.3 + .90*.5 + .5*.2) = 0.77

P(O_3 given traffic) = (.5 * .2) / (.10*.3 + .90*.5 + .5*.2) = 0.172

We can continue on to find:

P(O_1 given no traffic) = 0.64

P(O_2 given no traffic) = 0.12

P(O_3 given no traffic) = 0.24

In total, then, the risk associated with this decision is:

R(raid only if traffic on ground) = 187.76

We could then compare this to the decision to always raid regardless of what is on the ground:

R(raid either way) = P(O_1 is true given there’s traffic on ground)*20 +

P(O_2 is true given there’s traffic on ground) * 100 +

P(O_3 is true given there’s traffic on ground)*80 +

P(O_1 is true given there’s no traffic on ground)*20 +

P(O_2 is true given there’s no traffic on ground)*100 +

P(O_3 is true given there’s no traffic on ground)*80

Here the probabilities stay the same, but the losses differ because the action is different.

R(raid either way) = 135.76

Finally, we can look at the decision to not raid either way.

R(don’t raid) = P(O_1 is true given there’s traffic on ground)*0 +

P(O_2 is true given there’s traffic on ground) * 200 +

P(O_3 is true given there’s traffic on ground)*300 +

P(O_1 is true given there’s no traffic on ground)*0 +

P(O_2 is true given there’s no traffic on ground)*200 +

P(O_3 is true given there’s no traffic on ground)*300

Again, the probabilities remain constant, but the losses change.

R(don’t raid) = 301.60

The decision, then, that incurs the least risk would be to raid regardless of the actions on the ground. To summarize, in order to calculate risk, we need:

Prior probabilities, generated by analysts, for the different states of the world (in our example, the probabilities of O_1, O_2, and O_3)

The probability of observing different situations given each state of the world (in our example, the probability of observing traffic if O_1, O_2, or O_3 were true)

The actions we could take and the loss we would incur under each state of the world (in our example, the number of casualties incurred if we raid or fail to raid under each state)
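The components listed above can be assembled in a short script. The sketch below uses only the priors, observation probabilities, and losses from the worked example; the function and variable names are our own. It reports two quantities for each decision rule: the total obtained by adding the two conditional expected losses, as in the worked example, and, as an additional assumption on our part, the textbook Bayes risk in which each conditional expected loss is weighted by the probability of the observation that triggers it.

```python
# Inputs from the worked example.
PRIOR = {"O_1": 0.3, "O_2": 0.5, "O_3": 0.2}
P_TRAFFIC = {"O_1": 0.1, "O_2": 0.9, "O_3": 0.5}  # P(traffic | state)
LOSS = {
    "raid": {"O_1": 20, "O_2": 100, "O_3": 80},
    "no_raid": {"O_1": 0, "O_2": 200, "O_3": 300},
}

# A decision rule maps each possible observation to an action.
RULES = {
    "raid only if traffic": {"traffic": "raid", "no_traffic": "no_raid"},
    "raid either way": {"traffic": "raid", "no_traffic": "raid"},
    "don't raid": {"traffic": "no_raid", "no_traffic": "no_raid"},
}


def likelihood(obs, state):
    """P(observation | state)."""
    p = P_TRAFFIC[state]
    return p if obs == "traffic" else 1.0 - p


def marginal(obs, prior=PRIOR):
    """P(observation), summed over all states."""
    return sum(likelihood(obs, s) * prior[s] for s in prior)


def posterior(obs, prior=PRIOR):
    """Bayes' theorem: P(state | observation)."""
    m = marginal(obs, prior)
    return {s: likelihood(obs, s) * prior[s] / m for s in prior}


def conditional_loss(action, obs, prior=PRIOR):
    """Expected loss of an action given what was observed."""
    post = posterior(obs, prior)
    return sum(post[s] * LOSS[action][s] for s in post)


def unweighted_total(rule, prior=PRIOR):
    """Sum of the two conditional expected losses, as added in the text."""
    return sum(conditional_loss(a, o, prior) for o, a in rule.items())


def bayes_risk(rule, prior=PRIOR):
    """ASSUMPTION: textbook form, weighting each branch by P(observation)."""
    return sum(marginal(o, prior) * conditional_loss(a, o, prior)
               for o, a in rule.items())


if __name__ == "__main__":
    for name, rule in RULES.items():
        print(f"{name:22s} unweighted={unweighted_total(rule):7.2f} "
              f"weighted={bayes_risk(rule):6.2f}")
```

Because the script carries full precision rather than rounding the posterior probabilities first, its unweighted totals differ slightly from the hand-calculated figures. The weighted version yields 93.6, 72, and 160 for the three rules, so both versions rank raiding either way as the lowest-risk option.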

While there are multiple calculations that need to be done throughout the process, the calculations are relatively straightforward, and they also allow analysts and decision makers to generate ranges of risk that reflect uncertainty. For instance, if an analyst says there is about a 20-30% chance of O_1 being true, the calculations can be performed at each of these levels to provide a range of risk values.
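A range of this kind can be produced by re-running the calculation over a grid of prior values. The sketch below is one way to do so under two stated assumptions that do not come from the example itself: when P(O_1) changes, the remaining probability is split between O_2 and O_3 in their original 0.5 : 0.2 ratio, and risk is computed in the standard expected-loss form, in which each loss is weighted by the prior and by the probability of the observation, so its scale differs from the hand-calculated totals. All names are hypothetical.

```python
P_TRAFFIC = {"O_1": 0.1, "O_2": 0.9, "O_3": 0.5}  # P(traffic | state)
LOSS = {
    "raid": {"O_1": 20, "O_2": 100, "O_3": 80},
    "no_raid": {"O_1": 0, "O_2": 200, "O_3": 300},
}
RULES = {
    "raid only if traffic": {"traffic": "raid", "no_traffic": "no_raid"},
    "raid either way": {"traffic": "raid", "no_traffic": "raid"},
    "don't raid": {"traffic": "no_raid", "no_traffic": "no_raid"},
}


def bayes_risk(prior, rule):
    """Expected loss of a decision rule over states and observations."""
    total = 0.0
    for state, p_state in prior.items():
        for obs, action in rule.items():
            p_obs = P_TRAFFIC[state] if obs == "traffic" else 1.0 - P_TRAFFIC[state]
            total += p_state * p_obs * LOSS[action][state]
    return total


def prior_for(p1):
    """ASSUMPTION: the remaining mass keeps the original 0.5 : 0.2 split."""
    rest = 1.0 - p1
    return {"O_1": p1, "O_2": rest * 5 / 7, "O_3": rest * 2 / 7}


def risk_range(rule, p1_values):
    """Lowest and highest risk for a rule across the supplied values of P(O_1)."""
    risks = [bayes_risk(prior_for(p1), rule) for p1 in p1_values]
    return min(risks), max(risks)


if __name__ == "__main__":
    grid = [0.20, 0.25, 0.30]  # the analyst's 20-30% range for O_1
    for name, rule in RULES.items():
        low, high = risk_range(rule, grid)
        print(f"{name:22s} risk between {low:6.2f} and {high:6.2f}")
```

If the ranges for two courses of action overlap, the choice between them depends on where in the range the analyst's belief actually sits, which is a useful prompt for further collection or debate.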

While this example focuses on a tactical action, the same calculations can be performed at the operational or strategic level. Here, the states of nature (parameters) may focus more on strategic actors, and the actions would correspond to, say, moving forces into a region or employing a special operations task force.

The majority of the scientific literature appears to focus on properly quantifying the probability of outcomes occurring at different risk values. That is a necessary component, but it is insufficient to properly calculate risk. The key here is that risk should not be confined to a single staff section and should be calculated leveraging the subject matter expertise of analysts as well as the operational insight of others on the staff.

Critical in this too are consistent definitions of loss. In our toy example, we used human lives, which is a natural measure; however, other times financial loss or reputational loss would also occur depending on the action conducted. More emphasis, then, should be on formally defining what loss means for a given unit. It must remain consistent if different scenarios are analyzed.

While these calculations are perhaps simpler than some presented in the literature, we propose that simplicity is preferable here. In communicating with a wide range of audiences, the risk calculations provided above are easily explained and argued. If an analyst feels that the prior probabilities are incorrect, anyone with a calculator can make the adjustment without relying on a black box algorithm that may be hiding unrealistic assumptions.

This is not to argue that automation and AI have no role in our risk calculations. Rather, as operational units define the probabilities of different outcomes occurring, a properly established data pipeline can update the components of risk in real time. Further, if units decide to change their loss functions or to change the situations that may arise from different states of nature, a properly configured algorithm should be able to automatically adjust.

However, the complex nature of risk does not necessarily require a complex algorithm to understand. Mathematics and probability can assist us in establishing the correct calculations for risk, but cannot replace the subject matter expertise that is needed to generate prior probabilities, define outcomes we would expect to see given states of nature, or generate the possible actions/losses that would occur at differing states of nature. Further, proper data can help us refine the various components that are necessary in computing the Bayes risk for a given action. Recall that Bayesian risk is a function of knowing what the various states of nature could be (what the parameter space is), what analysts believe are the various probabilities of those states of nature (the prior beliefs), the likelihood of various observations given that the states of nature are correct (the likelihood function), and the cost of performing an action if we are correct or incorrect about our belief of the state of nature (the loss function). Here, we decompose these components and show how proper data collection and data management can assist in better sharpening these elements.

First, we focus on knowing what the various states of nature might be. That is, prior to any risk calculations we have to know the various possibilities for what may be occurring in a given region (or within a given network). To determine this, an analyst is limited by their creativity and historical knowledge. Here, it may be possible to leverage generative AI to assist in brainstorming the various possibilities in a given region. Note that at this stage, we do not seek to assign probabilities to these states of nature, but rather just to explore the possibilities.

The second aspect is to assign prior probabilities to these parameters. That is, based on the research that the analyst has performed, how likely is each of these outcomes to occur? This can be done in a variety of ways, and often the risk calculations that exist in the literature can be leveraged to assist in these calculations. For example, in Cragin, the author proposes using risk to mission * risk to force/(Previous Strikes +1) as a measure of risk. Here, if the parameter space is geographically focused, meaning one state of nature is that there is an active threat against the United States in country A and another state is that there is an active threat in country B, the measure the author proposes could serve as the basis for the prior probabilities of the states of nature being true.
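To make this concrete, the sketch below turns scores of that form into prior probabilities. The formula inside `score` follows the measure quoted above; the step that rescales the scores so they sum to one is our own assumption about how such scores could be used as priors, and the country inputs are invented purely for illustration.

```python
def score(risk_to_mission, risk_to_force, previous_strikes):
    """Measure quoted in the text: mission risk * force risk / (strikes + 1)."""
    return risk_to_mission * risk_to_force / (previous_strikes + 1)


def priors_from_scores(scores):
    """ASSUMPTION: rescale non-negative scores so they sum to 1."""
    total = sum(scores.values())
    if total <= 0:
        raise ValueError("at least one score must be positive")
    return {state: value / total for state, value in scores.items()}


if __name__ == "__main__":
    # HYPOTHETICAL inputs for two geographically defined states of nature.
    scores = {
        "active threat in country A": score(3, 2, previous_strikes=1),
        "active threat in country B": score(2, 2, previous_strikes=3),
    }
    for state, p in priors_from_scores(scores).items():
        print(f"{state}: prior = {p:.2f}")
```

Rescaling treats the listed states as mutually exclusive and exhaustive. If threats in both countries could be active at once, the parameter space would need to include that combined state before priors are assigned.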

Perhaps the place where data and literature serve best is in the next component of the Bayesian risk calculation, the likelihood of various observations given that the states of nature are correct. Here, we need to not only define what data we might expect to see for various parameters, we also need to define how likely it is that we observe those data if the parameter is true. For instance, in the Hawkes processes defined above, structure can be placed on the background rate to determine what causes violence to emerge. That is, when a terrorist attack has happened in the past, which socio-economic or other factors may have suggested that an attack was likely? These then could serve as the types of data that are collected. Once the data sources that will be used to calculate the likelihood are determined, it is imperative that the data remain consistent throughout the entire collection process. That is, all measurements should be standardized, and analysts should hesitate to modify the types or sorts of data that are being used in the calculations.

Historical data can also be leveraged to calculate the loss function. History can serve as a guide to help estimate the expected number of casualties or monetary loss should different actions be taken. Again, forms of generative AI can serve as a white board to assist analysts in determining what the possible actions and costs could be, but human oversight is necessary to ensure that only feasible courses of action are considered and also ensure that loss estimates are accurate.

After the various components of Bayes risk are established, data pipelines can be created to automatically update risk as new data gets observed. That is, as conditions change on the ground, the likelihood functions will change, which will modify the Bayes risk for all actions under consideration. These pipelines can be automated, allowing decision authorities a real-time updating of risk.
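A minimal version of such a pipeline is sketched below, reusing the priors, observation probabilities, and losses from the earlier raid example. Each new report updates the belief over the states of nature, and the expected loss of each action is then recomputed. The function names and the stream of reports are hypothetical, and the sketch assumes that successive reports are conditionally independent given the true state, which a real pipeline would need to check.

```python
PRIOR = {"O_1": 0.3, "O_2": 0.5, "O_3": 0.2}
P_TRAFFIC = {"O_1": 0.1, "O_2": 0.9, "O_3": 0.5}  # P(traffic | state)
LOSS = {
    "raid": {"O_1": 20, "O_2": 100, "O_3": 80},
    "no_raid": {"O_1": 0, "O_2": 200, "O_3": 300},
}


def update(belief, obs):
    """One Bayesian update: the current belief becomes the prior for the next report."""
    weights = {
        s: belief[s] * (P_TRAFFIC[s] if obs == "traffic" else 1.0 - P_TRAFFIC[s])
        for s in belief
    }
    total = sum(weights.values())
    return {s: w / total for s, w in weights.items()}


def expected_loss(belief, action):
    """Expected loss of an action under the current belief."""
    return sum(belief[s] * LOSS[action][s] for s in belief)


def best_action(belief):
    """Action with the lowest expected loss under the current belief."""
    return min(LOSS, key=lambda a: expected_loss(belief, a))


def run_pipeline(observations, belief=PRIOR):
    """Process reports in order; return (report, belief, best action) per step."""
    history = []
    for obs in observations:
        belief = update(belief, obs)
        history.append((obs, belief, best_action(belief)))
    return history


if __name__ == "__main__":
    # HYPOTHETICAL stream of reports arriving from the field.
    for obs, belief, action in run_pipeline(["no_traffic"] * 5):
        summary = ", ".join(f"{s}={p:.3f}" for s, p in belief.items())
        print(f"{obs:10s} -> {summary} | lowest expected loss: {action}")
```

In this invented stream, repeated reports of no traffic gradually shift belief toward O_1 until continuing to monitor becomes the lower-loss action. The same loop would apply to richer observation types once their likelihoods have been defined and standardized.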

Clearly, the vast amount of literature on risk indicates that this is a sticky problem that is easy to conceptualize, but quite difficult to solve. However, there are several areas that an operational unit interested in quantifying risk should focus on. In particular, instead of relying on potentially esoteric statistical calculations, a unit should focus on ensuring the process that they use in quantifying risk is correct and consistent. To do this, we advocate for employing a Bayesian risk model that combines subject matter expertise of analysts along with the operational experience of other staff sections to derive the risk of courses of action under consideration. Further, data standards should be ruthlessly enforced, and automation should be leveraged to assist in establishing data pipelines. While AI undoubtedly has a role in assisting this process, it should focus on modest applications and be used primarily to assist in structuring information from key data piles.

Conclusion: Thoughts on Future Evolution

This article began from a simple but uncomfortable observation: At precisely the moment when U.S. counterterrorism strategy has leaned harder into “risk-based prioritization,” our conceptual and practical tools for assessing terrorism risk remain fragmented, unevenly validated, and only partially aligned with how practitioners actually make decisions. NSM-13 and subsequent policy statements have elevated “risk” to a central organizing principle, even as counterterrorism resources, forward posture, and political attention have declined. That shift narrows the margin for error. It makes the quality of our risk assessments a critical component of national security, a component of CT with an importance on par with our collection and kinetic capabilities.

We argued that improving terrorism risk assessment requires both a clearer conceptual foundation and more disciplined practice. Part I mapped a wide-ranging body of literature, showing that despite definitional variation, most work converges on risk as some function of threat, vulnerability, and consequence. It highlighted a toolbox of approaches (probabilistic risk assessment, Bayesian methods, game theory, power-law, self-exciting processes, structured professional judgment, RTM, and CACC), each with distinctive strengths and blind spots. Part II then examined how one influential practitioner framework, the Joint Risk Analysis Methodology, translates some of these ideas into an institutionalized process that guides the Department of Defense. Part III proposed a way to operationalize terrorism risk using Bayesian risk models, not as a black-box replacement for expert judgment but as a disciplined framework that imposes standardization and rigor on assessments regarding assumptions, tradeoffs, and loss.

A key takeaway from these sections is that process matters at least as much as tools or technology.117 We are currently awash in data and surrounded by vendors promising AI-enabled solutions. Yet much of what is being sold concerns only one component of a proper risk calculation: estimating the likelihood of events. Our core claim is that without a coherent and transparent process for defining the states of nature, eliciting and updating prior beliefs, specifying a realistic action space, and rigorously defining loss functions, no volume of data or algorithmic sophistication will save us from mis-specified risk. A more modern terrorism risk posture must therefore begin with basics:

First, practitioners need to properly define the possible states of nature (parameters) relevant to a theater, network, or problem set. This step is inherently creative and interpretive. It can be supported by generative AI that helps analysts explore plausible scenarios and configurations. What AI can do here is expand the imagination; what it cannot do is decide which states of nature are strategically meaningful. The analyst must remain firmly in the loop.

Second, institutions need standardized processes for deriving and eliciting prior probabilities over those states of nature. This is where existing terrorism risk literature can and should be used more systematically to inform priors, rather than sitting on the shelf as an abstract academic exercise. Prior elicitation should be explicit, documented, and revisitable, rather than buried in unspoken assumptions or “gut feeling.” It is also essential that analysts and experts be calibrated to some common degree, so that the assessments and probabilities they provide are comparable across different agencies or subcomponents of a command.

Third, risk assessment should move beyond J-2 centric conceptions of threat and be treated as a genuinely whole-of-staff product. Incorporating J-3 and J-5 perspectives is essential to properly defining the courses of action that are actually available and the loss functions associated with them. Risk is not a property of a place or a group alone; it is a property of actions taken under uncertainty. That reality is captured in a Bayesian risk framework, but it should be reflected in institutional practice, not just in equations.
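
The three steps above can be sketched in a few lines of code. What follows is a minimal illustration under stated assumptions, not a description of any fielded model: the state names, courses of action, prior probabilities, likelihoods, and loss values are all hypothetical placeholders, and in practice each would come from the elicitation and whole-of-staff processes described above.

```python
# Illustrative Bayes risk sketch. Every name and number below is a
# hypothetical placeholder, not a value drawn from any real assessment.

# Step 1: states of nature defined by analysts.
# Step 2: elicited prior belief in each state (must sum to 1).
PRIOR = {"dormant": 0.6, "regrouping": 0.3, "plotting": 0.1}

# Likelihood of the indicators actually observed, given each state.
LIKELIHOOD = {"dormant": 0.05, "regrouping": 0.30, "plotting": 0.60}

# Step 3: loss for each course of action under each state of nature,
# on an arbitrary 0-10 scale agreed across staff sections.
LOSS = {
    "monitor":       {"dormant": 0, "regrouping": 4, "plotting": 10},
    "partner_ops":   {"dormant": 2, "regrouping": 2, "plotting": 6},
    "direct_action": {"dormant": 8, "regrouping": 5, "plotting": 1},
}


def posterior(prior, likelihood):
    """Update prior beliefs with observed indicators via Bayes' rule."""
    unnormalized = {s: prior[s] * likelihood[s] for s in prior}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("observed data is impossible under every state")
    return {s: w / total for s, w in unnormalized.items()}


def bayes_risk(loss, beliefs):
    """Expected loss of each action under the current beliefs."""
    return {
        action: sum(row[s] * beliefs[s] for s in beliefs)
        for action, row in loss.items()
    }


post = posterior(PRIOR, LIKELIHOOD)
risk = bayes_risk(LOSS, post)
best = min(risk, key=risk.get)  # action with the lowest expected loss

for action, value in sorted(risk.items(), key=lambda kv: kv[1]):
    print(f"{action:14s} expected loss = {value:.2f}")
print("lowest-risk action:", best)
```

The point of the sketch is that the answer depends on all three inputs at once. Changing the loss table, which is a J-3 and J-5 judgment as much as a J-2 one, can change the preferred action even when the intelligence picture is held fixed.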

Seen from this vantage point, one of the most striking gaps in the current ecosystem is the disconnect between individual-level and group/network-level terrorism risk assessment. Instruments designed to evaluate individual extremism and mobilization (including but not limited to TRAP-18, ERG22+, DRAVY-3, and related SPJ-based tools) are comparatively more developed, more systematically evaluated against real-world data, and more tightly coupled to operational decision-making. By contrast, tools focused on network or theater-level terrorism risk (such as JRAM-based processes) lean heavily on structured professional judgment without commensurate attention given to bias, calibration, or validation.

A key avenue for future evolution, therefore, lies in building conceptual and practical bridges between these two worlds. Network-level risk frameworks should learn from the methodological rigor and evaluation culture that has grown around individual-based instruments: clearer factor definitions, explicit rating guidance, calibration exercises, and structured feedback loops. Conversely, individual-level tools can benefit from the broader contextual insights generated by network- and place-based approaches. Terrorism risk today is jointly produced by networks, local opportunity structures, and individual trajectories; our assessment approaches should reflect that fact rather than relegating these domains to separate silos.

A second consideration for future work is to develop a framework for assessment that incorporates public opinion, political will, and competing strategic priorities. Terrorism rarely poses a risk to the sovereignty of the nation, so models that assess threats may eventually need to consider how much the populace cares, what the pressures would be on policymakers, and how tactical, operational, and strategic responses take away from competing priorities in ways that may put larger national security objectives at risk. The models discussed thus far apply both mathematical science and social science to the assessment of terrorism risk; there is also a nuanced art to understanding the human nature involved in deciding how hard and how fast to respond when developing a deterrent in a resource-constrained environment.

A third priority for future work is systematic evaluation of prior U.S. government terrorism risk judgments and scores. At present, we know relatively little about how accurate our institutional risk assessments have been, where they have consistently over- or under-estimated threats, or how biases and assumptions have played out over time. Retrospective studies that compare earlier risk ratings, JRAM outputs, or NSM-13-aligned prioritizations with subsequent attack patterns, plots, or operational outcomes would provide an empirical basis for refining both processes and models. Such work not only improves calibration; it may also build institutional humility and transparency about the limits of foresight in a domain characterized by strategic interaction and deep uncertainty.
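
As one illustration of what such a retrospective study could compute, the sketch below scores archived probability judgments against what later happened. It uses the Brier score, a standard scoring rule for probability forecasts, alongside a simple mean-bias check. This is an assumed choice of metric for illustration rather than a method proposed in this article, and the forecasts and outcomes shown are invented.

```python
# Hypothetical retrospective check of archived risk judgments.
# The forecasts and outcomes below are invented for illustration only.

def brier_score(forecasts, outcomes):
    """Mean squared gap between forecast probabilities and 0/1 outcomes.

    0.0 is a perfect score; always forecasting 0.5 scores 0.25.
    """
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need equal-length, non-empty sequences")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


def mean_bias(forecasts, outcomes):
    """Average forecast minus observed base rate.

    Positive values suggest systematic over-estimation of the threat,
    negative values suggest under-estimation.
    """
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need equal-length, non-empty sequences")
    return sum(forecasts) / len(forecasts) - sum(outcomes) / len(outcomes)


# Assessed probability of an attack in each past case, and whether one occurred.
past_forecasts = [0.9, 0.7, 0.2, 0.1]
observed = [1, 0, 0, 0]

score = brier_score(past_forecasts, observed)
baseline = brier_score([0.5] * len(observed), observed)
bias = mean_bias(past_forecasts, observed)

print(f"Brier score: {score:.4f} (uninformed baseline: {baseline:.4f})")
print(f"Mean bias:   {bias:+.3f}")
```

A real evaluation would need far more cases and would have to account for the fact that CT action taken in response to a high rating can itself prevent the outcome being forecast, which a simple score like this one does not capture.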

Finally, we have suggested a role for automation and AI in the evolution of terrorism risk assessment. Rather than chasing comprehensive “AI solutions” to risk, operational units would do better to enforce rigorous data standards, build automated data pipelines that update key components of Bayes risk in near real time (likelihoods, relevant indicators, environmental indices, and data on CT operations), and deploy AI primarily as a tool for structuring information, identifying anomalies, and supporting prior elicitation. It should not, however, be the final arbiter of risk. This division of labor plays to the strengths of both humans and machines: analysts and operators define the problem, the states of nature, and the loss landscape. Humans also bring context, nuance and an understanding of outliers to the system. Algorithms help keep the inputs current, disciplined, and consistent.

The argument here is not that a Bayesian risk model, or any other single framework, can resolve the profound uncertainties that define terrorism. It is that risk assessment must be treated as an explicit, structured, and contestable process, one that transparently integrates subject-matter expertise, operational judgment, and the best available data and models. In a strategic environment where U.S. counterterrorism efforts are asked to do more with less, the way we conceptualize and calculate terrorism risk is no longer a secondary technical issue; it is a central determinant of where and how we choose to accept risk, and at what potential cost. The reality is that the national security enterprise will do less with less, but these statistical models and the proper use of data can help us ‘do less’ better and with greater efficiency. If the United States is to remain serious about “risk-based” counterterrorism, then terrorism risk assessment itself must be modernized (conceptually, institutionally, and technologically) to match the complexity and dynamism of the threats it seeks to understand.

Don Rassler is an Assistant Professor in the Department of Social Sciences and Director of Strategic Initiatives at the Combating Terrorism Center at the U.S. Military Academy. His research interests are focused on how terrorist groups innovate and use technology; counterterrorism performance; and understanding the changing dynamics of militancy in Asia. X: @DonRassler

COL(R) Nicholas Clark, Ph.D., is an Associate Professor in the Department of Mathematics at the University of St. Thomas (Minnesota). Prior to joining St. Thomas, COL(R) Clark served as an Associate Professor at the United States Military Academy at West Point from 2016 to 2024, where he founded and led the Applied Statistics and Data Science Program. In 2021, he created a curriculum in data literacy that is now the most widely adopted program in the U.S. Army. Prior to his academic appointments, COL(R) Clark served as an intelligence officer for multiple units with U.S. Special Operations Command (SOCOM).

Colonel Sean Morrow has served as the Combating Terrorism Center’s director since January 2021. He has served in a variety of roles in the U.S. military, including as a Battalion Commander in the United Nations Command in Korea and as a Battalion Operations Officer and a Brigade Executive Officer for the 10th Mountain Division in southeastern Afghanistan. X: @SeanMorrow_

© 2025 Don Rassler, Nicholas Clark, Sean Morrow

Substantive Notes

[a] When it comes to terrorism risk, different U.S. government agencies use different risk calculation formulas. For example, the Department of Homeland Security has defined terror risk as a function of threat, consequence, and vulnerability, while the Department of Defense has viewed strategic terror risk as being a function of threat and consequence.

[b] Risk of taking Action A = Loss if Action A is taken given state of nature 1 is true * likelihood that state of nature 1 is true given we observe data on the ground * Analyst’s prior belief that state of nature 1 is true + Loss if Action A is taken given state of nature 2 is true * likelihood that state of nature 2 is true given we observe data on the ground * Analyst’s prior belief that state of nature 2 is true + etc.
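
The sum in note [b] can be written compactly as below. The symbols are shorthand assumed here for illustration and do not appear in the note itself: a is the action, θ_i is the i-th state of nature, x is the data observed on the ground, L is the loss, p(x | θ_i) is the likelihood term, and π(θ_i) is the analyst's prior belief.

```latex
R(a) \;=\; \sum_{i=1}^{n} L(a, \theta_i)\; p(x \mid \theta_i)\; \pi(\theta_i)
```

Dividing every term by the constant \sum_{j} p(x \mid \theta_j)\,\pi(\theta_j) turns the product of likelihood and prior into a posterior probability. Because that constant does not depend on the action, doing so rescales R(a) without changing which action has the lowest risk.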

[c] As noted by Dean and Pettet, “‘professional judgement’ is an amalgam of ‘evidence base’ and ‘tacit knowledge,’ which gets combined in the mind of the analyst to an unknowable extent and which in turn ends up as a final judgement call of the presumed ‘risk level’ a PoC” [person of concern] or group “may pose to the community.” Geoff Dean and Graeme Pettet, “The 3 R’s of risk assessment for violent extremism,” Journal of Forensic Practice 19:2 (2017).

[d] As noted by Jeff Gruenewald and his co-authors, “RTM relies on determining the spatial influence that risk factors have on the environment through two processes: proximity and density.” See Jeff Gruenewald et al., “Innovative Methodologies for Assessing Radicalization Risk: Risk Terrain Modeling and Conjunctive Analysis,” National Criminal Justice Reference Service, November 2021.

[e] In another publication, Mazarr expounded on this idea: “In a June 2012 article in the Harvard Business Review, Robert Kaplan and Anette Mikes suggested that the sort of hard-boiled confrontations so essential to real risk discussions are rare, and in fact an unnatural act for most human beings. They point to organizations that create rough-and-tumble dialogues of intellectual combat designed to ensure that risks are adequately identified and assessed. These can involve outside experts, internal review teams or other mechanisms, but the goal is always to generate rigor, candor and well-established procedures for analysis. The result ought to be habits and procedures to institutionalize what Jonathan Baron, professor of psychology at the University of Pennsylvania, has called ‘actively open-minded thinking’—a combination of a thorough search for information and true open-mindedness to any possibility, while avoiding self-deception through rigorous consideration of alternatives.” Michael J. Mazarr, “The True Character of Risk,” Risk Management, June 1, 2016.

[f] They can also be further problematized by other human factors. For example, as also noted by Mazarr: “Risk failures are mostly attributable to human factors—things like overconfidence, personalities, group dynamics, organizational culture and discounting outcomes—that are largely immune to process.” See Mazarr, “The True Character of Risk.”

[g] Cragin proposed a strategic risk model to account for how the “iterative nature of counterterrorism” as reflected in strikes and raids impacts terrorism risk. The model defines strategic risk as risk to mission and risk to force divided by the number of previous strikes (or raids) plus one. See Kim Cragin, “A Better Way to Talk About Risk,” Lawfare, July 6, 2025.

[h] According to one document that explains the JRAM, “most quantification serves to bound, not measure risk.” “Joint Risk Analysis Manual Slides.”

Citations

[1] Gia Kokotakis, “Biden Administration Declassifies Two Counterterrorism Memorandums,” Lawfare, July 5, 2023.

[2] Nicholas Rasmussen, “Navigating the Dynamic Homeland Threat Landscape,” Remarks given at Washington Institute for Near East Policy, May 18, 2023.

[3] Harun Maruf, “US counterterror official warns of growing global threat,” Voice of America, February 15, 2025.

[4] For example, see Aaron Clauset and Kristian Skrede Gleditsch, “The Developmental Dynamics of Terrorist Organizations,” PLoS ONE 7:11 (2012).

[5] See, for example, Ismail Onat and Zakir Gul, “Terrorism risk forecasting by ideology,” European Journal on Criminal Policy and Research 24:4 (2018).

[6] Clauset and Gleditsch.

[7] Ibid.

[8] For examples of broad-based and specific evaluations of some of these types of tools, see “Countering Violent Extremism: The Use of Assessment Tools for Measuring Violence Risk,” RTI International, March 2017; Ghayda Hassan et al., “PROTOCOL: Are tools that assess risk of violent radicalization fit for purpose?” Campbell Systematic Reviews, 2022; Adrian Cherney and Emma Belton, “Testing the reliability and validity of the VERA-2R on individuals who have radicalised in Australia,” Report to the Criminology Research Advisory Council Grant: CRG 40/21–22, June 2024.

[9] For an overview of these nine instruments, see Anna Clesle, Jonas Knäble, and Martin Rettenberger, “Risk and Threat Assessment Instruments for Violent Extremism: A Systematic Review,” Journal of Threat Assessment and Management 12:1 (2025). See also Caroline Logan, Randy Borum, and Paul Gill eds., Violent Extremism: A Handbook of Risk Assessment and Management (London: University College London Press, 2023); Caitlin Clemmow et al., “The Base Rate Study: Developing Base Rates for Risk Factors and Indicators for Engagement in Violent Extremism*,” Journal of Forensic Sciences 65:3 (2020).